Stupid memory problems!

/ by Ton

The original title for this post was actually “Stupid Macs!”, but luckily this story has a happy ending, also for OSX. :)

As you all might have noticed from the lack of blog postings, since early February we’ve been working very long days to get the final scenes rendered. This was the first real stress test for the Blender Render recode project, and needless to say our poor fellows suffered quite some crashing… luckily most bugs could be squashed quickly. After all, this project is also meant to make Blender better, eh!

With scenes growing in complexity and memory usage – huge textures, lots of render layers, motion blur speed vectors, compositing – it also became frustratingly complex to track down the last bugs… our render/compositing department (Andy & Matt, both using Macs) was suffering weird crashes about every hour. They were either in OpenGL, or they were ‘memory return null’ errors.

At first I blamed the OpenGL drivers… since the recode, all buffers in Blender are floating point, and OpenGL draws float buffer updates while rendering in the output window. They are using ATI cards… which are known to be picky about drawing in the front buffer too.

While running Blender in debug mode and finally getting a crash, I discovered that memory allocation addresses were suspiciously growing into the 0xFFFFFFFF range, or in other words: the entire address space was in use! Our systems have 2.5 GB of memory, and this project was only allocating about 1.5 GB of it.

To my great dismay it appeared that OSX only gives a process a memory space of 2 GB! Macs only use the second half of the 32-bit 4 GB range… this I just couldn’t believe… it wouldn’t even be possible for the OS to swap out unused data segments while rendering (like texture maps or shadow buffers).

After a lot of searching on the web I found some confirmation of this. Photoshop and Shake both mention this limit (up to 1.7 gig is safe, above that Macs might crash). However, the Apple Developer website was mysteriously vague about this… stupid marketing people! :)

Now I can already see the Linuxers smirk! Yes indeed, doing renders on our Linux stations went smoothly and without problems. Linux starts with memory allocations somewhere in the lower half, and will easily address up to 3 GB or more.

Since our renderfarm sponsor also uses OSX servers, we still really had to find a solution. Luckily I could quickly find the main cause of the memory fragmentation, which was in the code calculating the vertex speed vectors for the previous/next frame, needed for Vector Motion Blur. We’re already using databases of over 8 M vertices per scene, and building three of them and then computing the differences just left memory too fragmented.
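To get a feel for the sizes involved, here is a rough back-of-the-envelope sketch. It assumes each vertex carries nothing more than three floats (x, y, z); that is a simplification made for this example, and the real render database stores more per vertex. The function name is hypothetical.

```c
#include <assert.h>

/* Bytes needed for one array holding `floats_per_vert` floats per vertex.
 * ASSUMPTION: plain packed floats, no other per-vertex data. */
unsigned long long vertex_array_bytes(unsigned long long verts,
                                      unsigned floats_per_vert)
{
    assert(floats_per_vert > 0);
    return verts * floats_per_vert * sizeof(float);
}
```

With 8 M vertices and 4-byte floats this gives 96,000,000 bytes per copy, so three copies already want close to 300 MB in large contiguous blocks. In a 2 GB address space that is being carved up by many other allocations, blocks of that size are exactly the ones that stop fitting first.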

Restructuring this code solved most of the fragmentation, so we could go happily back to work… I thought.

Today the crashing happened again though.. and also the render farm didn’t survive on all scenes we rendered. It appeared that our artists just can’t efficiently squeeze render jobs to use less than 1.5 GB… image quality demands you know. (Stupid artists! :).

So, about time to look at a different approach. Our webserver sysadm (thanks Marco!) advised me to check on ‘mmap’, a Unix facility to be able to map files on disk to memory. And even better, mmap supports an option to also use ‘virtual’ files (actually “/dev/zero”) which can be used in a program just like memory allocations.

And yes! The first mmap trials revealed that OSX allocates these in the *lower* half of memory! And even better… mmap allocations allow you to address almost the entire 4 GB.

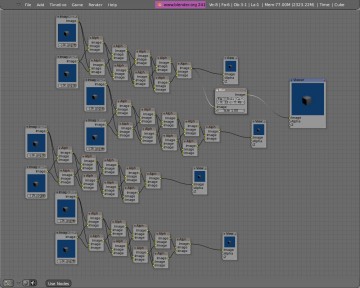

I added mmap in the compositor for testing, and created this gigantic edit tree using images of 64 MB memory each:

On my system, just 1 GB of memory, it uses almost 2.5 GB, and editing still is pretty responsive! Seems like this is an excellent way to allocate large data segments… and leave it to the OS to swap away the unused chunks.

Just a couple of hours ago I committed this system, integrated into our secure malloc system. While rendering now, all texture images, shadow buffers, render result buffers and compositing buffers use mmap, with very nice, stable and unfragmented memory results. :)

Even better; with the current tile-based rendering pipeline, it’s an excellent way to balance memory to swap to disk when unused, to be able to render projects requiring quite some more memory than physically in your system.

For those who like code fragments:

Equivalent to a malloc:

memblock= mmap(0, len, PROT_READ|PROT_WRITE, MAP_SHARED|MAP_ANON, -1, 0);

And free:

munmap(memblock, len);
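The two one-liners above can be wrapped into a malloc/free-style pair. What follows is a minimal sketch and not Blender's actual allocator: the names `mapalloc`, `mapfree` and `MapHead` are made up for this example. Since munmap needs the length back, the sketch stores it in a small header in front of the block, and it checks for `MAP_FAILED`, which is what mmap returns on error (not NULL).

```c
#define _DEFAULT_SOURCE /* asks glibc to expose MAP_ANON; harmless elsewhere */
#include <assert.h>
#include <stddef.h>
#include <sys/mman.h>

#ifndef MAP_ANON
#define MAP_ANON MAP_ANONYMOUS
#endif

/* Hypothetical header placed in front of every block, so that the free
 * function knows how many bytes to unmap. */
typedef union MapHead {
    size_t len;        /* total mapped length, header included */
    long double align; /* keeps the user pointer suitably aligned */
} MapHead;

/* malloc-like: returns zero-filled memory, or NULL on failure. */
void *mapalloc(size_t len)
{
    size_t total;
    void *p;
    MapHead *head;

    if (len == 0 || len > (size_t)-1 - sizeof(MapHead))
        return NULL;
    total = len + sizeof(MapHead);

    p = mmap(NULL, total, PROT_READ | PROT_WRITE, MAP_SHARED | MAP_ANON, -1, 0);
    if (p == MAP_FAILED)
        return NULL;

    head = p;
    head->len = total;
    return head + 1; /* user data starts right after the header */
}

/* free-like: returns 0 on success, -1 if munmap failed. */
int mapfree(void *ptr)
{
    MapHead *head;

    if (ptr == NULL)
        return 0;
    head = (MapHead *)ptr - 1;
    assert(head->len > sizeof(MapHead));
    return munmap(head, head->len);
}
```

Anonymous mappings start out zero-filled, so `mapalloc` behaves more like calloc than malloc. Each mapping costs at least one page, so a scheme like this only makes sense for big buffers (images, shadow buffers and the like), not for thousands of small allocations.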

-Ton-

(Too bad I don’t have time to show these AWESOME images that are coming back from the renderfarm all the time… or to talk about the very cool Vector Blur we got now, or the redesigned rendering pipeline, our compositing system… etc etc. First get this darn movie ready!)


I’m first! YAY!

Ton! Stop teasing! It’s not fair… you guys get to play with all those yummy sounding things….

This is so exciting!

Good Job! Well done to all of you!

( I love you ton ;) *sigh* )

Just one more thing…. Blender Rocks!!!!!

But why am I telling you guys this? You already know!

Just one question. Will this also come as an improvement in win32 systems?

I’m second! YAY!

I have tons of problems just getting the 2.41 version to even run on OSX, perhaps this update will help…?

Good luck

Thanks for the example code:

I have always wondered how to use the disk as a way to efficiently swap out sections of memory that you just don’t need. And what is even cooler is that the OS does all of the tricky swapping algorithms for you!!!! (yeessss!)

Kind Regards

Simon Harvey

Ton, this is not just a Mac OS X problem, I got it also in Windows, when I got my 2GB of RAM and tried rendering a scene that loaded 1.5GB of RAM and it was fragmented. Blender crashed with a segfault, no other errors.

At first I thought it was the RAM that was faulty, but since it was an expensive set of RAM and hand tested by the dealer I decided to try it on Linux (at the time I had to use Windows for other reasons, but was scheduled to get back to my pretty Linux in a week or so, but it ended up being sooner, great), in Linux it was solid as a rock, I even duplicated the geometry and added more stuff to it and it just went fine.

While testing I also came up with some other nifty results.

In Linux memory usage dropped by ~200 MB, dropping from ~1.5 GB in Windows to ~1.2GB in Linux.

In Linux the render took ~5 sec less, from ~75 seconds to ~70 seconds.

In Linux the system remained responsive, while in Windows it was choking.

All in all, if you want to use Blender, use it in Linux or Mac OS X as now it also seems good ;) .

— Rui —

While Windows doesn’t have mmap – an equivalent was pointed out on the list by lukep so by the time we do a release this memory issue should be solved on all platforms.

LetterRip

One reason again to use gnu/linux or any other OpenSource system!

Ton, i’m really impressed by your ability to debug this kind of flaws.

regards

-Omar

ton,

you know you could have solved all your problems by putting on your Vrooom jacket (like superman with his cape), and slapping the side of your computer…..duh!

glad your problems are fixed, smooth sailing from here on out!

good luck!

eric

Just out of curiosity, what OS do you use, Ton? From things I’ve read, it sounds like Linux is an important part of Blender development.

Richard,

Ton uses OS X. Linux is used by a large number of Blender users and gives the best performance of the three main platforms (OS X, Linux, Windows).

LetterRip

Thanks LetterRip.

Cool, I hate it when Memory Allocation goes screwy…

For Windows systems it seems that CreateFileMapping (http://msdn.microsoft.com/library/default.asp?url=/library/en-us/fileio/fs/createfilemapping.asp) gives a similar solution, so there is hope for Windows users, too.

/Nathan

NEW!!!! BLENDER CHALLENGE

Try and subdivide a mesh 24 times…

and win a free CRASH and/or HANG from your computer

TIP: Subdivide 2 linked vertices ;)

May the best Crash win

Pawprint,

19 subdivisions is 1 million edges and vertices if you start with a single edge

24 would be 32 million verts and edges, also during the subdivision there is a temporary huge increase in required ram (4 times the in use ram it looks like…)

LetterRip
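The figures in the reply above can be checked with a little arithmetic, reading them as edges and vertices counted together (that reading is an interpretation, as is the assumption that each subdivision splits every edge exactly in two). The function names are made up for this sketch.

```c
#include <assert.h>

/* Edges after n subdivisions of a single edge, ASSUMING every pass
 * splits each edge into two. */
unsigned long long edges_after(unsigned n)
{
    assert(n < 63);
    return 1ULL << n;
}

/* A chain of e edges has e + 1 vertices. */
unsigned long long verts_after(unsigned n)
{
    return edges_after(n) + 1;
}
```

Under these assumptions, 19 subdivisions give 524,288 edges plus 524,289 vertices, a bit over a million elements in total, and 24 subdivisions give 33,554,433 elements, i.e. roughly 32 × 2^20. Add the temporary copies made during the subdivision itself and it is easy to see why a 512 MB machine gives up around level 22 or 23.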

Got mine to Subdivide 22 time…the 23rd one crashed Blender

Hehe…

For the peeps and Team who still looking for textures

check out…

http://www.texturewarehouse.com/gallery/index.php

Read the top off the page…sounds like any license we know?

mmmm?

Enjoy

Thanks Ton for the blog news after such a long delay. I understand that you are really quite busy these days.

I was just wondering when the scheduled release date is. We can’t wait any longer :-). Anyway, take the time needed for a perfectionist result.

I have an idea: have you (Ton) thought about also using your huge work on project Orange for educational purposes? Why not write a book “Walkthrough of the Elephant dream movie – scene …X…”?

You could share your experience. We could look over your shoulders and learn how to make a short scene of your film. The Orange team definitely now has tons of experience, documents and resources that could be used to prepare such a book. Such a book would also be welcome because Manual 2.3 is quite outdated.

It is just an idea. I do not know what others think.

Good luck

Jiri

I would buy the book :)

heh,heh, linuxer here that smiles :) big time… It’s not because of better mem handling, but mostly because of the “vague” support from the big-time companies. I just can’t get enough of the open-source community and its exceptional support. but you already know that, you’re part of it.

Apart from that, i would also like to wish the best and no more problems until the release!

Damn… only 22! I can’t subdivide 23 because the system run so slow. (linux+512ram)

:)

I can’t wait…

:D

Pawprint (a.k.a. Werner van Loggenberg):

It’s “like” a license we know, but with two additional (and unacceptable) restrictions: 1) The textures cannot be used for commercial purposes under that license, and 2) Any derivative work must be licensed under the same (more restrictive) license.

(But this really belongs in that other thread…)

Ton:

Whoever thought up the idea of Project Orange to help improve the Blender Software was a genius! I’m flabbergasted to see the mountains of improvements in Blender in such a short period of time. Early one morning a few months ago I had imagined the theoretical idea of vector-based blurs to eliminate the need for multiple ‘tween renders, but dismissed it as too difficult to be practical. Next thing I know, I’m reading that you already implemented it! Amazing! And now this fix to address monster memory usage needed by “real artists”… Amazing… (sorry for the repetition. It’s the only word I can think of.)

What about the Tuhopuu GUI port? It is a long time I have no more news about it… :’-( Hope the new GUI has not been abandoned…

very impressive problemsolving!

claas

Hello Ton:

Where is the Blender 2.4 Guide? And, Where is the update for the Blender Gamekit?

And now ‘LET’S BLENDER THE WORLD!’

Hi

==========================================================

Tom Musgrove said on 17 Feb, 2006:

Richard,

Ton uses OS X. Linux is used by a large number of Blender users and gives the best performance of the three main platforms (OS X, Linux, Windows).

LetterRip

==========================================================

How I configure my Blender in Linux to do this? they are compiled? anybody think a tutorial to compile Blender Source?

since now, thanks

YAY!!! A Blog Update! Sorry about all of the problems you’ve been having, keep up the good work!

-Chris

Is the vector based motion blur in a test build yet? I jumped for joy when I heard you were developing this.

Renato,

[QUOTE]What about the Tuhopuu GUI port? It is a long time I have no more news about it… :’-( Hope the new GUI has not been abandoned…[/QUOTE]

Ton and Matt Ebb, the two key people behind the GUI port are both too busy with Orange, so expect to see it some time after Orange comes out.

unfear,

read the manual it explains how to compile the source.

http://mediawiki.blender.org/index.php/Manual/PartI/Compiling_the_sources

Blazer003,

it has been in the test builds for a week or so now. Go the blender testing forums to download.

Javier Reyes,

the guide is updated at the wiki

wiki.blender.org

the updated game documentation for the game engine is here

http://www.blender3d.org/cms/Documentation.628.0.html

LetterRip

knowsnuttin,

Ton thought up project Orange, and yes – he is a genius :) (but don’t let him know – otherwise his head might swell too much resulting in a burst :) ),

jiri,

[QUOTE]I was just wondering when is scheduled release date. [/QUOTE]

They received a month extension on funding, so probably a month later than the previous schedule.

Regarding a book – the DVD is also a documentary on the making of the film, a book would be a bit redundant.

LetterRip

mmap if it works so well be lazy.

Many translated programs have mmap.c files that translate from unix style mmap to win32 style. Make coding simpler. Most are taken from apache. One file in blender under apache licence if it makes it more stable quicker most likely the best way.

I am interested what effect has patch had on linux blender. Linux blender suffers from memory fragmentation over time. Ie it get slower does not stop. I wonder if this slows the fragmentation rate and keeps the performance faster for longer. Note the slow down is at max about 3 percent. If really good if the mmap stops this.

I was wondering bout all these improvements

???

have anyone ever played with ZBRUSH 2?

reason why i ask is this…in ZBRUSH you can sculp with

Z Spheres and then wrap(skin) a mesh around it.

(these Z Spheres act like bones to)

but what i noticed was that those ZSpheres looks alot like

Blenders ‘Armatures’ set to ‘Envelope’ in the Edit(F9) menu.

NOW…heres the thing, is it possible to write a script or make

Blender Wrap a ‘Skin’ around those ‘Enveloped’ Armatures?

NOTE: that each ball of the armature can be resized

Like ZBRUSH’s Z Spheres…

So you can in basicly model the Bone+Skin of a Character in one go.

Whould it be possible?

Use a 64-bit OS if 2 GB is not enough. You will simply postpone the problem with the dirty fixes you are proposing. Under NT, user code may only have 2 GB of address space (or 3 GB with the appropriate boot switch). There is another switch that allows 36-bit addressing, but ring ring 2006 is here and it says SWITCH TO 64-bit. :p

Windows 64 will nicely support large amount of memory allocation (I advise you to play with VirtualAlloc() and commit the memory as needed, CreateFileMapping() was made to share memory between processes and map files to memory).

If you need to address more than 2 GB, use 64-bit addressing (bis repetita). You’re trying to put an elephant into a phone booth.

Pawprint,

Blenders envelopes was inspired by zbrush – it would be possible to write code to do so, but no one has yet.

Autistor,

Blender has been 64 bit clean since before it became open source. However the render farm donated is OS X, and not on 64 bit hardware. So there was no option to switch to 64 bit hardware and software.

LetterRip

Autistor: Blender already had 64 bits support since 1999. It has been gathering dust though… with a lot of new code being added without proper 64 bits awareness.

We’re definitely going to make the move to 64 bits this year. A proper migration test unfortunately requires not only a 64-bit OS, but also developers with > 5 GB of memory in their systems to fully ensure all allocations and pointers work as expected.

What you call ‘dirty fixes’ actually doesn’t seem to be so… also for 64 bits it has advantages to assign memory to be swappable first.

And last but not least: 64 bits isn’t a common platform yet… our renderfarm sponsor for example still uses a 32-bit OS. So it’s still useful to find practical solutions for existing limitations.

I’m not saying what you’re doing is useless, I’m pointing out that if you or one of your users needs a lot of memory, the real answer is to upgrade the platform. I’m sorry to hear that you don’t have the hardware required for your tests.

If you ever find a 64-bit PC lying somewhere, know that you don’t need to have more than 4 GB of physical memory to thoroughly test the 64-bit support, thanks to virtual memory. The allocation of pointers is not your concern, it’s the concern of the VMM. Don’t worry, it’ll work fine. ;) If you’re concerned about some “cast” issues, the 64-bit compilers generally warn you about that. Use VirtualAllocEx() to have addresses that would be invalid in a 32-bit environment (for testing purposes).

Don’t wait for 64-bit to be everywhere to catch up. And good luck. ;)

Oh Great, Thanks

this Sun sponsored workstation is going to be used to help the on-going efforts people elsewhere are doing to ensure 64bit cleanness .. after the movie is done. it’s a bit tricky with e.g. Library usage, ’cause .blend files are basically memory dumps, if you have data from both your local 64bit blend, but also link to data in other blends that have been saved by other Blenders in 32bit systems.

~Toni

Hi, I found this topic on the Blender Forum; is it the same thing on Breezy Ubuntu with Blender 2.41? (right, change the python 2.3 to 2.4)

http://www.blender3d.org/forum/viewtopic.php?p=48478&sid=611da4c3160064bba64ced3b1891c9e2

Awesome,,, can use even more memory now… Make faster make better..

One thing that bothers me now… It looks like Render passes was passed on since thing are coming out of the render farm now.. :( PLease someone pick this up when Orange is done.. Passes was going to be one of those HUGE features to give artist control of rendering and tests and so on..

Question about your Renderfarm:

How your .blends are distributed on your cluster? How is calculation in/from Blender managed?

I bet i missed the info here. :]

Man… I’ve been watching silently without responding (since most everyone said what I was going to say ;) ). Well you guys are doing a good job. No a great job:)

Uhh just one minor addition:) -Is the orange team going to do another project this big?

You guys make windows sound like it stinks. It works fine for me.

Autist0r, don’t you think if he were going 64bit, he would work on a platform that has _mature_ 64bit support? Like perhaps Linux, which has been running 64bit clean on amd64 for about 3 years.

Simon Harvey, that’s not quite what mmap() is for.

tripdragon,

they might be using the renderpasses currently – probably just need to change a #define in the source…

Omar,

[QUOTE]we are using an xseed, works nicely – and drqueue in the local lan at the studio. [/QUOTE]

according to antoni.

Johnathon,

[QUOTE]You guys make windows sound like it stinks. It works fine for me.[/QUOTE]

Windows has relatively poorer performance than linux and os x for Blender especially for renderfarm work. If you want the best Blender performance have linux compiled for your specific hardware, and then compile Blender for your specific hardware.

autist0r,

there is a 64 bit windows compile in the blender.org testing forums if you would like to use that.

LetterRip

I thought 32-bit machines had a limit of 4GB, not 2GB. I know that OS X has some in built process limitations that are used to prevent fork-bombing attempts and Linux sometimes has these off by default. This is probably not what’s going on here though. Maybe check out the default system limits in sysctl or these:

http://www.hmug.org/man/2/setrlimit.php

http://www.osxfaq.com/man/2/brk.ws

I heard of the 2GB limit elsewhere and I’m not sure if this is what mmap does but for Windows, you can use a Ram disk:

“The other consideration to memory is the maximum physical amount allowed by the motherboard. In Windows a single process as executed from the CPU can use a maximum of 2gb of memory by default. This can be upped to 3gb with a known Windows hack, but this is not recommended for stability. This is where a RAM disk comes into play.

RAM Disk:

RAM disk is a virtual disk drive created entirely out of RAM. A RAM disk is given a drive letter specified by the user, and the operating system will see the RAM disk as yet other hard drive. RAM disks will work exactly like a hard drive except that it is many times faster and all its data is lost every time the operating system reboots. The benefit to using a RAM disk is that you can easily use this technique as a trick to bypass the 2gb process limit of Windows if software caches its data to this drive. It is also a trick for bypassing the 4gb memory limit imposed by 32bit CPUs. The only maximum limit to a RAM disk is the amount of memory that can be accessed across the motherboard.”

from this site: http://www.createdbycheney.com/testb/tutorials/hardware1.html

And yeah, Linux will always be faster than OS X because Linux uses a monolithic kernel as opposed to a microkernel. That was a design choice.

Ton & crew,

When this project is over, I will be willing to give up my AMD64 3800 + req 5G mem. To help work out 64bit awareness issues. If that would be a help. We’ve all got to pitch in what we can after all.

PS. Just receiving the final parts for a new system. So it will be an extra machine very soon.

One thing to remember. OSX until 10.4 has not been a 64-bit OS. 10.2 was all 32bit. 10.3 had a 64bit kernel with a 32bit app layer. So the kernel could use 64bits, and each program could have its own 2GB of memory but each app could have a max of 2GB. From my understanding, 10.4 was the first version to have full 64bit.

And one other thing to remember when commenting about mmap, we can’t just throw out the 32-bit community. At least not for another 5 years.

tbalbridge,

Ton attempted to port Blender to 64 bit OSX 10.4. Turns out you can only run console apps in 64 bit, there’s no support for OpenGL, Carbon, Quicktime, .. .

(insert extreme sarcasm) Gotta love Macs.

Let’s all do ourselves a favor and go to Linux.

Hey…what if you ran Linux on the Mac renderfarm….

Joking, joking

Just a question about the Xseed, with that cluster does blender use all the power of the renderfarm to render each frame -that’s multiply the processing power as it is one only machine-; or does it render in a frame-per-node basis?

I think Xseed is not a clustering system but the name that they give to their network; how have them implemented it? can it be implementes in Linux or just Mac??

O.K does anyone know how to got this to work. I spent a long time trying to get the space ship to work. For some reason the animation I made of the space ship going in to space does not play when I play the game. Tell me if it works on yours computer.

Here it is http://www.ninjabuddy.org/Problem.zip

I e-mailed Ton a while back about some design issues with the renderer, and he stated that they are doing a 1 frame per node system. He said that they were getting about 1 hour per frame. So it wouldn’t be that bad.

Really, the only reason you would need more than one node per frame is when the number of frames you are rendering is less than the number of nodes in the farm

[Quote]

Really, the only reason you would need more than one node per frame is when the number of frames you are rendering is less than the number of nodes in the farm

[/Quote]

That’s not *entirely* true.

1. If a node goes down in the middle of processing, you may have to re-run that entire chunk of work (ie re-render the entire frame). Dividing the task into smaller chunks reduces the loss, (and hence potentially duplicated work).

2. A parallel system tends to run as a single stream at the end of the run (some node ends up running after all the others have finished). This single-streaming could last for any amount of time up to the time of a full chunk of work (that last node may have started when all the others had just about finished). The greater the variation in the complexity of the individual chunks (frames), the more likely this is. So, the smaller the work chunks, the shorter the possible time that the render-farm can run on a single node – but the greater the per-chunk overheads.
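Point 2 can be illustrated with a toy model. The sketch below is not how any real queue manager works; it just hands each chunk to whichever node frees up first and reports when the whole job finishes and how long the farm is only partly busy at the end. `simulate_farm` and `MAX_NODES` are names made up for the example, and per-chunk overhead is ignored.

```c
#include <assert.h>
#include <stddef.h>

#define MAX_NODES 64

/* Greedy toy model of a render farm: every chunk goes to the node that
 * becomes free first.
 *   *makespan  : time at which the last node finishes
 *   *idle_tail : time between the first node running dry and the end */
void simulate_farm(const double *chunk_minutes, size_t nchunks, size_t nnodes,
                   double *makespan, double *idle_tail)
{
    double busy_until[MAX_NODES] = {0};
    double earliest, latest;
    size_t i, n;

    assert(nnodes > 0 && nnodes <= MAX_NODES);

    for (i = 0; i < nchunks; i++) {
        size_t best = 0;
        for (n = 1; n < nnodes; n++)
            if (busy_until[n] < busy_until[best])
                best = n;
        busy_until[best] += chunk_minutes[i];
    }

    earliest = latest = busy_until[0];
    for (n = 1; n < nnodes; n++) {
        if (busy_until[n] < earliest) earliest = busy_until[n];
        if (busy_until[n] > latest)   latest = busy_until[n];
    }
    *makespan = latest;
    *idle_tail = latest - earliest;
}
```

Three 60-minute frames on two nodes finish after 120 minutes, with one node idle for the last 60. Cut the same work into six 30-minute chunks and the job is done after 90 minutes with no idle tail. In practice the per-chunk overhead mentioned above eats into that gain.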

Just some observations.

BTW: The new work that you are describing sounds awesome!

Cheers!

Nik.

I think that it would be great if blender could render on something other than a frame-per-node basis; I know it is possible to make a cluster for blender in linux using OpenMOSIX, but again it works on a frame-per-node basis. In this case, if you have an anim with 250 frames and 5 nodes, then you should manually (or with an external script) split the file (or the settings in the command line rendering) in five, so the first renders frames 1-50, the second renders frames 51-100, ….

Then, launching the five renderings at the same time, openmosix will distribute them automatically (on a per-process basis). But once again, if it takes 1 hour per frame and the power goes down at minute 45 (for example), you lose all 5 frames and have to re-render all of them (another hour to get those same 5 frames). If it could render as a whole, each frame would (supposedly) take only 12 min. (and if the power goes down at minute 45, you only lose 2 frames); this solution could also be great in a network that doesn’t have a fixed number of nodes (nodes entering and leaving at any time). Saying all this, I must admit that I don’t know if that is possible at all.
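The manual split described above is easy to script. Here is a small sketch of the arithmetic only; `node_frame_range` is a made-up helper, not part of Blender or openMosix, and it spreads any leftover frames over the first nodes.

```c
#include <assert.h>

/* Inclusive frame range [*start, *end] for node number `node` (0-based)
 * when frames first..last are split as evenly as possible over nnodes.
 * If there are more nodes than frames, some nodes get an empty range
 * (*end == *start - 1). */
void node_frame_range(int first, int last, int nnodes, int node,
                      int *start, int *end)
{
    int total, base, extra, before, count;

    assert(nnodes > 0 && node >= 0 && node < nnodes && last >= first);

    total = last - first + 1;
    base = total / nnodes;
    extra = total % nnodes; /* the first `extra` nodes take one more frame */
    before = node * base + (node < extra ? node : extra);
    count = base + (node < extra ? 1 : 0);

    *start = first + before;
    *end = *start + count - 1;
}
```

For 250 frames on 5 nodes this gives 1-50, 51-100 and so on up to 201-250. Each range could then be passed to a background render, for example with Blender's `-s` and `-e` command-line options, along the lines of `blender -b scene.blend -s 51 -e 100 -a` (the file name is of course hypothetical).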

To the Orange team: more 3 weeks to the online release isn’t it?? can’t wait anymore :P, don’t be to nervous with the deadline, I’m sure you guys will handle it just fine :D

I really hope that we will soon see in the web lot’s of tut about animation (since they don’t seem to be a lot for now); speaking of that, one of the best tut about the new armature functions -particulary the stride and how to setup a walkcycle-) can be found here:

http://www.telusplanet.net/public/kugyelka/blender/tutorials/stride/stride.html

Hi there! Congratulations for the excelent work, it looks Marvelous and I can’t wait to see the rest :D

Here is my help for the team, I found a site that has some nice textures and they are for free, Take a look!

site:

http://www.mayang.com/textures/

have a nice day!

Glad to see your working out the technical problems but come on please post more art! Even concept sketches would be cool, please????

It is a little bit off-topic… But I’m searching for those links mentioned by Ton (Photoshop and Shake about the 1.7GB limit…).

Can someone give me that links? Thank you.

Yep, blender crashes the 22nd time I subdivide a segment. I’m surprised that I couldn’t get it to go further with 2gb of ram! But this problem is fixed now, so it’s all good :D

tbaldridge: erm, I mailed that “no frame took longer than 1 hour”. The average is quite less. Don’t have the latest stats though, we’ll post that info too, later. :)

hey Linux-lovers and Windows-worshippers ! Blender started on Irix. Irix has been 64-bit for way over ten years. You guys are more than a little bit behind the times ….

Today is D Day for the Orange Team!

Congratulations to Ton and the whole team for the awesome job done these last months.

Thank you also for coding ever amazing new tools for the community!

I would have liked to be with you at the première! I’m sure that it will be a great moment!

Philippe.

caliper digital can solve these problems 8-)

2 gig of memory!?! i don’t do too much advanced stuff but that seems like a lot even for a program like blender. did you all remember to decimate your meshes?

blender is way ahead of the competition in hardware efficiency; blender will do full textures in realtime (sort of) while Maya lags on a pentium four with 2 gig of ram just to display a shading preview of a single mesh. score one for open source!

Baaman, yes 2 gigs of memory, whats so strange?

caliper digital is much more easy to use ;))

bit late to be adding comments but oh well

about mmap: on linux, malloc will use mmap all on its own if appropriate… at least glibc malloc will. (I think it’s for large memory blocks)

http://www.gnu.org/software/libc/manual/html_node/Efficiency-and-Malloc.html
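With glibc, the size above which malloc switches to mmap can be tuned through `mallopt` and its `M_MMAP_THRESHOLD` parameter. The sketch below is glibc-specific (it will not build on OSX, and other C libraries may lack `mallopt`), and the wrapper name `set_mmap_threshold` is made up for the example.

```c
#include <assert.h>
#include <malloc.h> /* glibc-specific: mallopt(), M_MMAP_THRESHOLD */

/* Ask glibc's malloc to serve requests of at least `bytes` bytes
 * with mmap. Returns 1 on success and 0 on error, as mallopt() does. */
int set_mmap_threshold(int bytes)
{
    assert(bytes >= 0);
    return mallopt(M_MMAP_THRESHOLD, bytes);
}
```

Calling, say, `set_mmap_threshold(64 * 1024)` early in the program would send every request of 64 KB or more through mmap. Blocks handed out that way are returned to the OS as soon as they are freed, which is the same property that made the explicit mmap approach attractive on OSX.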

I don’t understand too much of all this. I’m just getting into compositing and somehow, through the days browsing, came here through open source..

I just want to say I’m impressed by what you do, and all the testing work.