New Mini Production report

by Bassam

Well, feels like it’s about time to update everyone on how we are doing, as we get into the later part of the project. Ton told you all about the extension we got, so I’ll fill you in on where we are now, and what we’ll be doing in the remaining time.

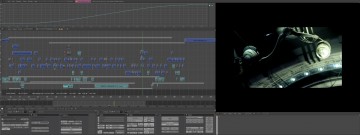

We’ve animated 92 of our 130 total shots. The live edit image above shows the progress: the top “animatic” strips are almost gone, and the rest are all production files. Of those scenes, some are “timing complete”, meaning main character and environment animation are done and these scenes are ready for sound FX. Scene 4 already has a gorgeous soundtrack done by Jan, and he has scene 5 in his grubby little mitts… we can’t wait to hear what he does with it :) Scene 6 is next; it is shot complete, minus final tweaks.

The live edit is a massive help. We drop completed and in-progress shots into a directory structure with a .blend sequence edit sitting on top (using relative paths). Thanks to the patch from Peter Schlaile, the sequencer is now almost a realtime editing system: at the half-HD resolution we currently run it at, it never consumes more than 90 megs of memory, doesn’t need to “cache” images before playback, and sports quite a few other optimizations and improvements. Peter himself used the sequencer to edit a 2-hour movie, something that would have been next to impossible in the past. With the addition of ffmpeg support, this should make blender quite a viable NLE, especially for us linuxers :) You may notice from the live edit that the movie is getting longer than the animatic; the more we finish, the longer the movie gets! The animatic ran slightly under 7 minutes total; the current live edit is about 8 minutes and 8 seconds.

As to what we use the live edit for, well:

a – as we replace OpenGL previews with renders and final renders, it slowly “becomes” the movie; editing started before rendering did :)

b – at the final hour, we’ll drop the full-res frames into the same directory structure, and the live edit .blend becomes a sort of EDL, used to render out the full-res movie.

c – most importantly, it really streamlines the process of working… Previous posters on the blog have asked how and why we split shots into separate blend files, and what that does to editing a continuous piece of animation. There are several consecutive shots in the movie that span the same bit of dialog or action, with cuts on action to the characters in long, medium or close-up shots. The live edit lets two, sometimes three, animators work on consecutive shots and still sync their styles and the motion across the cut, so that it appears completely continuous. It’s quite amazing to see, even for us :)

d – last but not least, Jan needs the edit to do the sound for the movie :)

So, what will go on in the next few weeks?

We’ve got a short hiatus while we finalize the designs for scenes 7, 8 and 1 (two extremely short scenes and one long one) and, at the same time, push the scenes we’ve done and the characters to final status (this means completing animation, texturing, and rigging).

For Matt this means heaps of UV unwrapping in addition to modelling and design for the last two scenes, after which he’ll be both animating and working towards final texturing and lighting.

Ton is working hard both as a producer and as a coder. As producer, he got us the extension for the project, and now he’s doing tasks that range from finding a venue for the premiere to making sure we have a place to live for the extra time. As a coder, he recently ripped out and rebuilt blender’s rendering internals, and added the icing of a node-based compositor: a truly amazing feat of programming in such a short period of time.

Andy will be working towards the final renders; in general, this means making the movie look awesome (but don’t tell him that; he’s quite shy). He’ll be doing the arduous tasks of painting textures, setting up lights, design work, and using and debugging the Noodly compositor.

Basse is tired, and going home right now; but when he’s here he does both animation and set design, and continues to produce heaps of mechanical monsters.

Between games of pool and trips to the red light district, Lee will be putting the final animated polish on scenes 2 and 3 before he starts on scene 7. (Okay, I’m kidding about the red light district; Lee still believes it’s the site of Amsterdam’s clothes-dyeing industry.)

I will be doing the dozens of bone-driven shapes that fix the characters’ deformations (this includes each joint of each finger, for instance). I’ll finalize the character rigs and cloth rigs, and assist Toni in dropping in the scripted environment animations for scene 2. Hopefully I’ll be done by the end of the week and able to get back to animating.
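For readers wondering what “shapes driven by bones” means in practice: a corrective shape key is faded in as a joint bends. Here is a minimal sketch of that mapping, assuming a simple linear ramp between two angles. The function name and the 0 to 90 degree range are hypothetical and illustrative, not the project’s actual driver setup.

```python
def corrective_weight(angle_deg: float, start_deg: float, full_deg: float) -> float:
    """Map a joint angle to a corrective shape-key weight in [0, 1].

    Assumes start_deg < full_deg: below start_deg the shape is off, at or
    beyond full_deg it is fully on, and in between the weight ramps linearly.
    """
    if full_deg == start_deg:
        raise ValueError("start_deg and full_deg must differ")
    t = (angle_deg - start_deg) / (full_deg - start_deg)
    return max(0.0, min(1.0, t))


# Hypothetical finger joint: the fix starts at 0 degrees of bend and is full at 90.
for angle in (0, 45, 90, 120):
    print(angle, corrective_weight(angle, 0.0, 90.0))
```

Clamping at both ends keeps the shape from overshooting when the finger bends past the range it was sculpted for.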

After this week, we’ll be working half on animating and half on getting final renders through the pipeline… there are lots of interesting details on that, but I feel like I’ve typed way too much (and barely scratched the surface). I’ll leave it to the rest to fill you in on the really cool stuff happening to blender and the project (like the noodly compositor!), and I’ll leave you with a little screenie of the typical animator’s desktop at the project, and settle in for a nice Saturday evening’s work ;)


Everything looks incredible... my expectations keep rising and rising. Once again, I still can’t wait! (Surely you all deserve a weekend off!)

Hello

and thank you, to you and all the others, for taking the time to make these little technical posts

Useful and interesting

Hey, that’s SO cool. You’re making blender an NLE system, a compositing tool…

There is one thing I’m concerned about: after you finish the movie, please don’t just take home the knowledge you gained. We’re all so eager to hear about the way you used blender and made the movie. Take time to write things down, exactly as you did right here. Just a bit more, please. :-P

Thanx for your excitement and work!

I agree with David 100%. You should write down all your experience. And why not in a book?

“The animatic ran at slightly under 7 minutes total. The current live edit is about 8 minutes and 8 seconds.”

Quite short imho :/ How long should it be in the end?

Wow. It’s looking quite nice.:-) It’s good to hear that it’s a bit longer than the original animatic. ( More frames of blended goodness to gawk at.;-) ) Keep it up, Orange team.:-)

Well, our original plan was for 12 minutes.. but more recently we decided to go for 7-8 minutes. Keep in mind that the “recommended” length for shorts – at least according to some sources is 6 and a half minutes.

Of course, as you said, this is all a matter of opinion :). I don’t know exactly how long the final will be. I wouldn’t be surprised if it reached 10 minutes, but I really hope it doesn’t: there’s a limit to how well and how fast we can work and get a cohesive result within our deadline, and I don’t want to exceed it.

So besides the character rigs you’re using cloth rigs. That means you animate the character and then hand-animate the cloth too, right? Don’t you use any softbody dynamics for the cloth?

And I was thinking of buying a commercial NLE program… pfuu!! How foolish I was ^_^

Is most of the NLE stuff still in the orange branch?

Because if not, we’re going to need some tutorials or something to show how to use this new tool…

Hallo Orange Team!

…Thanks again for this post with “Orange Project” progress information…! Looks very interesting…!

Blender is getting better and better every day, with more new features added! Great!

i also agree with David 100%!

seeYa

and all the BEST!

That is so COOL!

It looks just like it is REAL!

Wow cool yay you deserve a weekend

For sour.

Argh, they’re using my audio code. Please not! It wasn’t supposed to be *used* in production! It is just another nightly hack! ;)

The NLE stuff is a patch in the patch tracker, it should be committed ‘soonish’.

LetterRip

A technical question, you guys: how do you manage to get a smooth playback in the sequencer in order to make editing comfortable?

I suppose the source frames you load into blender for editing in the sequencer are final-resolution ones… which would require heavy processing power to play back, even if you set the preview window resolution low… and talking ’bout this… what resolution do you use for previewing within the sequencer? Did I understand correctly that it’s half the full source resolution?

And now a point to Bassam: Quoting: “With the adition of the ffmpeg support, this should make blender quite a viable NLE, especially for us linuxers :)”

—Well….a true NLE environment requires «as a must» two preview windows instead of one: first one for source material, second one for final edit preview….So: are there plans to implement both source/monitor preview windows in future versions of Blender? That would make it become a *real*, *free* and *professional* NLE alternative to its W*nd*w$ counterparts like, say, Premiere or Avid systems….

One more thing: could you elaborate a little on “Thanks to the patch from Peter Schlaile, the sequencer is almost a realtime editing system now”? Wow… sounds good… and what is Schlaile’s patch all about? What does it consist of?

A final note: as David says….we all Elephant’s Dream fans are really *eager* to get the full details -technical or not- of every step of making this film….so….I agree with Carlinhos in….why not in a book? (or a free e-book, in order to ‘close the circle of freedom’) ;-)

Sorry for my long writing….these are things that happen when you get fans *r-e-a-l-l-y* interested in what you are doing.

Cheers,

Juan

(Madrid, SPAIN)

In response to those asking for the details of making the movie, I was under the impression that the Orange DVD will include all the files used to make the movie, as well as a making-of.

@JuanJavier (regarding Peter Schlaile’s patch):

https://projects.blender.org/tracker/index.php?func=detail&aid=3790&group_id=9&atid=127

I was invited to an Orange dinner last friday and saw the (what they call) live-edit.

Unfortunately I forgot my camera at the restaurant, so I can’t show you readers the black eyes and the coffee stains just yet…

* Spoilers warning *

The edit looks remarkably good, edit wise. The timing looks very nice, even without sound. I think the part with the … mmmm, okay I’ll put this in a mail.

* — *

It was good to see the Orange guys all happy and very confident. I think they’ve grown into a great team. The blogging and openness are still an area of discussion. Everybody wants this project to be totally open, but of course openness isn’t a project of its own, although it could involve that much work. A blog post easily takes half an hour away from animating. The same goes for “the making of”. At some point, 6 months of the lives of 8 people are going to be condensed into 16GB…..

JuanJavier – very interesting questions :)

I don’t pretend to be an expert on NLEs; the only one I’ve been even close to proficient on is Premiere, which most consider midrange rather than pro. Before that I used video (Super VHS and 3/4 inch editing systems), which of course is “linear”.

I’ve never really understood why I need two monitors on an NLE; for video of course, this is essential, but that’s a different system. On a computer, I mostly just ignore one of them. I tended to treat the feature largely as a throwback to the old days of analog video editing, but I’m probably wrong, and it probably has to do with a different workflow than mine.

You can of course have as many “monitors” in blender as you want, but they only show the output ..

But by useable NLE I mean:

a multitrack editor with transitions, effects/filters, support for at least some usable codecs (thru ffmpeg), decent speed/performance (realtime, probably at lower resolutions), smart memory consumption, and scalability to long edits. It’s also fairly stable, and you can use the .blend with relative paths as a sort of edit decision list – i.e. if you replace low res files with higher res ones in the same path, you can then render a high quality movie that you edited in real time at a lower quality..quite handy!
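The “relative paths as an edit decision list” idea can be sketched in a few lines. This is a minimal model under stated assumptions: the `Strip` class, the four-digit PNG file naming and the directory names are hypothetical, and this is not how blender stores sequence strips internally.

```python
from dataclasses import dataclass
from pathlib import PurePosixPath
from typing import List


@dataclass
class Strip:
    directory: str  # relative to the .blend, e.g. "scene04/shot12" (hypothetical)
    start: int      # first image number used from that directory
    length: int     # number of frames the strip contributes to the edit


def frame_paths(root: str, strips: List[Strip]) -> List[PurePosixPath]:
    """Expand the edit into the ordered list of image files it will read."""
    paths = []
    for strip in strips:
        for n in range(strip.start, strip.start + strip.length):
            paths.append(PurePosixPath(root) / strip.directory / f"{n:04d}.png")
    return paths


edit = [Strip("scene04/shot12", 1, 3), Strip("scene04/shot13", 10, 2)]
for path in frame_paths("/project", edit):
    print(path)
```

Because the strip list never mentions resolution, overwriting the OpenGL previews with full-res renders under the same filenames changes what gets read but leaves the edit itself untouched.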

Bellorum has the link to the patch already – I hope it gets included in blender before too long.

Alexander: we do encounter some rather strange things with blender audio, but it’s usable at least. It’s kind of a double-edged sword: you don’t get any bug reports from us because it works, but sometimes things don’t work, or work in a way we don’t fully understand. But I’m not really complaining; it’s crucial to the project, and we couldn’t really animate without it.

Wow i love the new features this project brings into blender.

Thx to Ton and the whole coder team. I hope these features will soon be integrated into the official blender release.

PS/OT: What about the subtitles? I think there are lots of people in the blender community who’d like to help you with the translation.

another OT: Will there be a Utah teapot in the movie as a kind of running gag, like in many other computer animation movies? Would be quite cool ^^

No no no…Not the Teapot! Suzanne!

buergi.. yeah, subtitles will be needed, and I think there will be a call for participation when we’re ready. As to a Utah teapot... well, we have plans :) not exactly a teapot, though….

Bassam: ah great ^^ i’m impatient to get this DVD.

JuanJavier: Concerning your “dual video monitor” comment, you can monitor source material instead of the final output at any time by LMB-dragging over a clip, in case you didn’t know already.

Bassam: If you have any questions or anything particularly annoying in the audio code, I might try to explain or fix.

One word…

Wauuuuuuuuuuu….!!!

I hope see this soon…

well, something weird that we seem to see: if you mix down and render from a blend file, then add both the sound and video clips to the sequence editor, the sound clip is 1 frame shorter (always? at least in our case). It seems to line up OK if you align them from the end of the clips, but it’s a bit of a puzzler.

oh, and not necessarily a blender thing, but a Linux ALSA thing: we’ve got sound cards that can do hardware mixing, but blender, being OSS-ish (I’m guessing), still hogs the dsp. Other applications still get sound, but other blender instances don’t. Is there any flag or command we can use to make this work (short of running OSS; I’m not even sure there are drivers for our hardware)? It’s not a big deal (just for me, since I have the live edit and my current shot loaded at the same time all the time).

Blender is surely beginning to turn into something marvellous. I reckon it’s time to consider dropping the game engine out of blender. I don’t know about others, but I think it’s just something that takes away the “pro feeling” that blender has been growing for some time now. Risking going too off topic, I just had to mention this, as I feel the time is coming :)

As already stated, this is looking great! Since my paycheck arrived the other day, it’s time to order the DVD :)

Greets

Richard Olsson

Sweden

Richard Olsson: Blender 2.41 has just been released! There have been enhancements made to the game engine so, it’s still being developed. Check it out.

Dropping the game engine out of blender!!!!

What are you thinking? The game engine is the best part!!!

Even though I don’t use the game engine much, because I find it a bit uncomfortable, I agree with Timothy that the game engine is an important part of blender; perhaps not for everybody, but there are many blender users who use it.

So if there is such a great feature, why drop it???

I’ve edited a great deal with analog video systems and never understood two-monitor editing, other than that it was technically not easy to hook one monitor up to multiple (3) video machines.

For live action it’s good to see all the sources, but for non-linear editing it’s not very useful. As far as I know, film editing doesn’t use two projectors…

Bassam: Oh, yeah, the first thing sounds like a typical off-by-one error. It’s probably an easy fix – just need to find where it’s actually off…
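One way a consistent one-frame shortfall like the one Bassam describes can arise is integer truncation when converting between video frames and audio samples. The sketch below assumes a 44.1 kHz mixrate and 24 fps (neither is stated above), and the helper names are hypothetical; it is not taken from blender’s audio code.

```python
def samples_for_frames(frames: int, mixrate: int, fps: int) -> int:
    # Truncating division: a fractional trailing sample is simply dropped.
    return frames * mixrate // fps


def frames_truncated(samples: int, mixrate: int, fps: int) -> int:
    # Converting back with truncation: a partial last frame vanishes.
    return samples * fps // mixrate


def frames_rounded_up(samples: int, mixrate: int, fps: int) -> int:
    # Ceiling division: a partial last frame still counts as a whole frame.
    return -(-samples * fps // mixrate)


for fps in (24, 25):
    for frames in (1, 2, 3):
        samples = samples_for_frames(frames, 44100, fps)
        print(fps, frames, samples,
              frames_truncated(samples, 44100, fps),
              frames_rounded_up(samples, 44100, fps))
```

With these numbers one frame is 1837.5 samples at 24 fps, so every odd frame count loses half a sample on the way out and then a whole frame on the way back; at 25 fps (exactly 1764 samples per frame) nothing is lost. Rounding up on the way back is one possible fix, if this is in fact the cause.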

Concerning the Alsa/OSS thing: I’m still running OSS, because I simply see no reason for switching to Alsa. Maybe it’s better, but my very simple sound hardware (SB PCI 128) is supported perfectly by OSS. You’re right though, multiple applications can’t use the same sound device easily.

I wouldn’t know how to fix the latter…

The game engine will evolve (it already has) and become quite a tool.

I’ll personally use it for product presentations and walkthroughs like no VRML or X3D will allow.

You see, there is more to Blender than stills and movies.

Erwin has the intention of making it a plugin (the API could be used by other GEs as well).

Good continuation to the Orange team and thanks to Bassam for taking some time out of his busy schedule to give us some news. :)

Jean

Hello everybody, and project orange’s members !

I’m very surprised by the new Blender…!!! :-) It’s good for all blenderians!!!

I want to buy your Orange project’s DVD, but I don’t understand spoken English very well… :-)

I want to know if you will put subtitles on your Orange project’s DVD!??? My languages are French and Portuguese… but if you put English subtitles, it’s better than nothing… :-)

Thanks for your response and for reading my message,

Severino, one new blenderian…

Is Peter Schlaile’s patch in the orange tree, or do you still have to patch it yourself? Hate to bitch…. but I thought the orange team was meant to be using a straight CVS that was currently available to everyone, without needing to patch it yourself.

This whole project is really great. I can’t wait to see the final thing. Also, it’ll be awesome to see all of these features spill over to the public release.

Being a film guy, I can appreciate all the fun you guys are having. Keep it up, Orange Team!!

***HAPPY BIRTHDAY LEE***

>>>>>HAPPY-BIRTHDAY>>>>>>>To You>>>>HAPPY-BIRTHDAY>>>>>>>To You>HAPPY BIRTHDAY To Lee>>>>HAPPY-BIRTHDAY>>>>>>>To You!!!

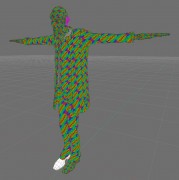

Why are you using this strange xor pattern for uv mapping?

Question about the compositor:

Can it be used to make non-CG films/videos, like if I just want to color correct my live-action (non-CG) film? Will it have key frames (ie: animated color-correction)? What about blue/green screen compositing, or matte generation? Will it include all compositing functions (add, subtract, multiply, divide, inverse, max, min, blur, curves, etc).? Can a group of nodes be combined into one node, like the meta-strips in the sequencer, so that it would be possible to create a blue screen compositing node?

Please say yes, yes, maybe, yes, and yes.

rafster,

yes – although it may not have everything on your list the first release. Color correction on a film instead of pure CG – sure. Greenscreen – likely not 2.42, compositing most i think are in already, group nodes are already in.

LetterRip
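To illustrate what a group node is (a sub-graph that behaves like a single node, much like a meta-strip in the sequencer), here is a small sketch. The `Node`, `evaluate` and `group` names are hypothetical, pixels are reduced to single grey values, and nodes must be listed in dependency order; this is not blender’s compositor API.

```python
from typing import Callable, Dict, List

Pixel = float  # one grey value keeps the sketch short


class Node:
    def __init__(self, name: str, op: Callable[..., Pixel], inputs: List[str]):
        self.name = name      # key under which this node's result is stored
        self.op = op          # function computing the result from the inputs
        self.inputs = inputs  # names of the values this node reads


def evaluate(nodes: List[Node], sources: Dict[str, Pixel], output: str) -> Pixel:
    """Run nodes listed in dependency order and return one named result."""
    values = dict(sources)
    for node in nodes:
        values[node.name] = node.op(*(values[name] for name in node.inputs))
    return values[output]


def group(name: str, inner: List[Node], inner_inputs: List[str],
          inner_output: str, inputs: List[str]) -> Node:
    """Wrap a sub-graph so it can be used like a single node."""
    def op(*args: Pixel) -> Pixel:
        return evaluate(inner, dict(zip(inner_inputs, args)), inner_output)
    return Node(name, op, inputs)


# A two-node "average" sub-graph, packed into one group node.
average = group(
    "average",
    [Node("sum", lambda a, b: a + b, ["a", "b"]),
     Node("half", lambda s: s * 0.5, ["sum"])],
    inner_inputs=["a", "b"], inner_output="half", inputs=["fg", "bg"],
)
graph = [average, Node("out", lambda x, m: x * m, ["average", "mask"])]
print(evaluate(graph, {"fg": 0.75, "bg": 0.25, "mask": 0.5}, "out"))
```

The outer graph only sees one “average” node with two inputs; a blue screen keyer built from many nodes could be packaged the same way.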

Oh, I should have made myself clearer. By saying “dropping the engine OUT OF blender” I didn’t mean dropping its development altogether, but rather not forcing someone like me, who does mostly game-related 3D work but doesn’t use the engine, to have it molded into my beloved blender copy.

I would much rather see the BGE as a stand-alone module, or plugin if you will, as someone stated above. I just don’t see a reason for 3D editing, animation and video compositing software to have an integrated game engine (!) At least not for me (and, I’m guessing, many others like me) who model for an “inhouse” engine or more as an art form.

But nonetheless. Orange sure rocks the blender community.

The ffmpeg integration is fantastic, works perfectly on Ubuntu Breezy PPC. Blender has become the only usable DV-editor for linux PPC (other packages rely too much on libdv, very slow with endian issues – cinelerra, pitivi, diva and to some extent kino)

Peter Schlaile’s patch does not apply cleanly against cvs any longer (since orange merge with head), do you oranges have a more recent one? (would be nice to try it out with nodes compositing)

I thought if you set up alsa with dmix this should work:

export SDL_AUDIODRIVER=alsa

export AUDIODEV=default

start with:

blender -g noaudio

but it’s like blender refuses to take in the SDL environment vars.

levon: the orange branch only existed for as long as it did during the feature freeze for the 2.40 and 2.41 releases; orange is “dead” now and we are back to using the merged bf cvs.

Peter’s patch is pending review by the developers, and is freely available on the patch tracker (where I got it) to apply to your local build. I just keep a “special” patched blender binary now for sequence editing. I imagine the next release will have all or some parts of the patch.

Pitel: that pattern is available from the uv image window, the purpose of it is to provide a high contrast pattern that allows the uv unwrapper to quickly diagnose stretching or distortion problems in the unwrap. It’s ugly, but in practice more effective than a black and white checker.
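For the curious, the classic XOR texture the question refers to is easy to generate: each texel is the bitwise XOR of its x and y coordinates. This is a minimal sketch; blender’s own UV test image may be generated differently.

```python
from typing import List


def xor_pattern(width: int, height: int) -> List[List[int]]:
    """Greyscale XOR texture: each texel is (x XOR y), kept in 0..255."""
    return [[(x ^ y) & 0xFF for x in range(width)] for y in range(height)]


# Print a small corner of a 256x256 texture to see the structure.
texture = xor_pattern(256, 256)
for row in texture[:4]:
    print(row[:8])
```

Because XOR produces edges at every power-of-two scale, stretched or squashed areas of an unwrap tend to stand out at most zoom levels, whereas a plain checker only shows distortion at its one cell size.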

anders_gud: I guess since I applied the patch a while ago I didn’t have a problem... it’s always possible to rewind cvs when you do a checkout if you want to use the patch. In my case (ubuntu breezy too!) I disabled the ffmpeg stuff from compiling because a) I don’t need it and b) I didn’t want the headache of figuring out the exact right versions of the ffmpeg libraries needed for it to work (it seems the ffmpeg package occasionally changes its API between releases).

oh, and we’re not using dmix; the sound cards have hardware mixing. I tried dmix too, but still got no joy :/

Bassam:

I’m using it now :-)

No headache – the ffmpeg cvs20050918-4ubuntu1 package works fine

Yeah why sound should be so hard on linux beats me…

The only issue with sound now for me is if I change mixrate to 48 kHz I get no sound output…. on a powerbook Ti

hehehehe …. buahahaha …

You seem to be making a good movie… not even “good for blender”… just good… so… good luck =)

hey cheers for the birthday wishes guys, had no idea people would realize haha =D

WOW! This looks really great. Thanks for taking the time to write this report Bassam.

Blender really is becoming a multi-purpose tool!

Hey Lee, I forgot about it, hehe

Happy Birthday

Seems like there will be a party and no work today …

— Rui —

Quote the great Bassam: “I’ve never really understood why I need two monitors on an NLE; for video of course, this is essential, but that’s a different system.”

I totally agree. For video editing I use Vegas Video by Sony, and it uses only one monitor for the preview; very cool workflow.

Quote: “multitrack editor, with transitions, effects/filters, support for at least some useable codecs (thru ffmpeg) decent speed/performance (realtime probably at lower resolutions) smart memory consumption and scaleable to long edits. ”

Hey Guys, fantastic!

If Blender will be a thing like this one, it will be a dream!

Quote : “if you replace low res files with higher res ones in the same path, you can then render a high quality movie that you edited in real time at a lower quality..quite handy!”

This is like an offline edit with a proxy source (normally DV quality clips of the originals). Very useful indeed!

Thanks for sharing Bassam, and thanks a lot to all the crew there! ;-)

Whew. I’ve been looking for a ‘useable’ opensource NLE Editor that will run on my Machine. looks like I’ll only have to wait a few more weeks! (Well, at least until 2.42 comes out…)

Also, are all these new features getting documented? If so, where?

I’ve been following orange for a while as a 3d artist. Now it’s started to drift into my day job, haha. =)

I’m an editor by day, and it’s interesting to see some of these things pop up in blender. It’s not something I would edit on (far too used to commercial software like FCP and Avid), but I think it’s a great thing to have around for those of us not lucky enough to have several hundred thousand dollars of editing machines where they work. =D

I wish you guys all of the best, like everybody else here. Show everybody that open source software doesn’t mean it’s only for people who can’t afford commercial programs. I stopped using my XSI license right around the time when the 2.4 betas of blender started surprising the crap out of me. =D

Are you guys actually aware of what you are doing for blender? Not only is the recent coding of blender more than amazing, and so is making people aware of it, but the movie project itself, testing how far blender can be pushed and used for larger projects and producing a quality feature film, makes more and more people understand that blender is on its way to being mature, and so to being considered a serious 3D tool.

But well, I guess you guys know that already!

great work!

claas

This is a brilliant example of not “under-estimating” what a free product like blender can do. Great stuff Orange :D

Keep up the great work, Orange team. As an Avid Pro editor I find that I mostly use 1 vision screen and switch between the master vision and the source. BUT 2 screens are very useful for trimming your edits, and if you can’t do an incoming/outgoing trim or a slip/slide trim (4 windows!) then you really are missing a very useful tool.

I’d rather be Blending than Aviding!

D

[Quoting what Myster_EE said on 31 Jan, 2006]:

Whew. I’ve been looking for a ‘useable’ opensource NLE Editor that will run on my Machine. looks like I’ll only have to wait a few more weeks! (Well, at least until 2.42 comes out…)

–Any special reason? (just wondering….)

[Quoting what David said on 31 Jan, 2006]:

(…). As an Avid Pro editor I find that I mostly use 1 vision screen and switch between the master vision and the source. BUT 2 screens are very usefull for trimming your edits and if you cant do a incoming/outgoing trim or a slip/slide trim (4 windows!) then you really are missing a very usefull tool.

—That was exactly what I meant to say. I myself, however, have been used to dual source/monitor editing since the Premiere 5.0 days, and up until recently with the Avid DV thing.

Although several weighty reasons have been posted here in favour of single-monitor editing, I would still be more comfortable if Blender supported dual monitors. I am sure many video editors would agree with me. That way, Blender’s integration of a *professional* video editing tool and a *professional* 3D modelling and animation tool would make it touch the sky, literally, among multi-purpose film/video production suites. It would surely outplay many other commercial alternatives (if not *all* of them).

Just my 2c.

thanks to everyone

Juan Javier.

I know this question is not about NLA editing, but since you guys are using Linux at the studio, can you tell me how the hell I can select an edge loop? (Alt+right-click should work.) For me, all that happens is that the window gets resized. I’m using Mandriva 2006 and KDE…. Please help me. I’m rather desperate; I just want to dump that Windows thing. Oh, and… you guys are innnnncredible. Keep up the good work.

graphicflor:

you can just use Shift-Alt-right-click in blender. It’s a bit stupid; the other way is to change the window behaviour in KDE.

right click on window titlebar -> configure window behaviour -> actions -> window actions (tab).

there you can choose modifier key. we are using windows/meta key here at the office.

.b

Quote David: “BUT 2 screens are very usefull for trimming your edits and if you cant do a incoming/outgoing trim or a slip/slide trim (4 windows!) then you really are missing a very usefull tool.”

As said, in Vegas Video by Sony (but also in many other pro NLE and compositing tools, e.g. in just about all Discreet software), the trimming monitor is always the same as the preview monitor. This workflow is justified by keeping the focus on just one monitor. Anyway, I think that in the future, once Blender includes the outstanding NLE capabilities listed here, the number of preview monitors could be made customizable.

I agree that one monitor is fine for most stuff, including setting original in/out points. But I also agree that two are helpful when dragging the edit point between two clips and for slip/slide operations. In the former, you’re changing the out of the first video and the in of the second at once, while in the latter you’re changing both the in and out of one clip at the same time. In those cases, having two monitors is helpful to be sure you haven’t gone too far as you drag.
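The two trims described above can be modelled with nothing more than in and out points. Here is a minimal sketch, assuming clips are described by inclusive source in/out frames; the `Clip`, `roll` and `slip` names are illustrative (“roll” is the common name for dragging the edit point between two clips), not any particular NLE’s API.

```python
from dataclasses import dataclass


@dataclass
class Clip:
    source_in: int   # first source frame used (inclusive)
    source_out: int  # last source frame used (inclusive)
    source_len: int  # frames available in the source media

    @property
    def duration(self) -> int:
        return self.source_out - self.source_in + 1


def roll(outgoing: Clip, incoming: Clip, delta: int) -> None:
    """Move the cut: the outgoing out point and incoming in point shift together."""
    new_out = outgoing.source_out + delta
    new_in = incoming.source_in + delta
    if not (outgoing.source_in <= new_out < outgoing.source_len):
        raise ValueError("outgoing clip cannot be trimmed or extended that far")
    if not (0 <= new_in <= incoming.source_out):
        raise ValueError("incoming clip cannot be trimmed or extended that far")
    outgoing.source_out = new_out
    incoming.source_in = new_in


def slip(clip: Clip, delta: int) -> None:
    """Slip: same duration and position in the edit, different source frames."""
    if clip.source_in + delta < 0 or clip.source_out + delta >= clip.source_len:
        raise ValueError("not enough source media to slip that far")
    clip.source_in += delta
    clip.source_out += delta


a = Clip(0, 99, 200)
b = Clip(50, 149, 300)
roll(a, b, 10)   # a now ends 10 frames later, b starts 10 frames later
slip(b, -20)     # b shows earlier material but keeps its length
print(a, b, a.duration + b.duration)
```

In both operations the total length of the edit stays the same, which is exactly why it helps to see both affected frames at once while dragging.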

But I’m sure that’ll come eventually anyway. Just having stuff like slip/slide in the first place regardless of monitors would be a huge step.. :)

My main concern is just having a half-way decent free editor on windows. I am active in the online yo-yo community where many individuals and clubs around the world share edited videos online all the time. Most of those people either use Windows Movie Maker or pirate programs like Premiere. Having a good cross-platform open source NLE would be a great resource for many applications outside of 3D.

I was a bit shocked when I looked around for any free editors on Windows outside of WMM that would work to edit with music and didn’t crash all the time. I couldn’t find a single one that I considered usable. I was able to script Avisynth for a recent project, but that only worked because I wasn’t editing to music and because I can program.

Keep up the good work guys. Blender is really getting amazing!

Hi,

just wanted to mention, my patch also adds a channel tuner (the generic way of having two or more monitors…). I had 3 camcorder sources to fade against each other. This means you have 3 monitors for the source streams and one large output monitor. Absolutely no problem with the patch I made.

Hey, it should be far better than Avid and Premiere, right ;-)

You can even do sensible color correction with the patch, since the latest version includes a UV scatter plot and a luma waveform preview mode (usable with several channels at once…).

Greetings,

Peter
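A luma waveform display like the one Peter mentions plots, for every image column, where the pixels’ brightness falls. Here is a minimal sketch, assuming RGB values in the 0..1 range and ITU-R BT.601 luma weights; the patch may use different coefficients or bucket counts, and the function names are hypothetical.

```python
from typing import List, Tuple

RGB = Tuple[float, float, float]


def luma(r: float, g: float, b: float) -> float:
    # ITU-R BT.601 luma weights (an assumption; the patch may differ).
    return 0.299 * r + 0.587 * g + 0.114 * b


def waveform(image: List[List[RGB]], levels: int = 10) -> List[List[int]]:
    """Count, per image column, how many pixels fall into each luma bucket.

    result[level][x] is the pixel count for column x in that bucket; drawing it
    with level on the vertical axis gives a luma waveform display.
    """
    width = len(image[0])
    counts = [[0] * width for _ in range(levels)]
    for row in image:
        for x, (r, g, b) in enumerate(row):
            # Clamp so out-of-range values still land in the end buckets.
            bucket = min(max(int(luma(r, g, b) * levels), 0), levels - 1)
            counts[bucket][x] += 1
    return counts


# Tiny 2x2 test frame: left column white, right column black.
frame = [[(1.0, 1.0, 1.0), (0.0, 0.0, 0.0)],
         [(1.0, 1.0, 1.0), (0.0, 0.0, 0.0)]]
result = waveform(frame)
print(result[-1], result[0])  # brightest bucket, darkest bucket
```

Reading the columns left to right mirrors the picture’s layout, so a blown-out sky or crushed shadows show up as piles of counts at the top or bottom bucket above the matching part of the frame.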

whee, just tried it.. awesome, great work Peter.

I should mention that the Avid also allows you to open as many source windows as you like (not just the one attached to the Master screen).

And Peter, that sounds COOL! Keep up the ridiculously free work and know that lots of people admire you and all the other coders for it.

D

My jaw’s on the floor!!!

You are masters!

Some cool stuff you all got going on there. Blender Rocks.

Thank you to all the developers, contributors, and the community.

Very impressive stuff. Finally, a free, decent compositor.

I’ve started a topic about the comp module on the elysiun forum, giving my first thoughts and tests on it.

http://www.elysiun.com/forum/viewtopic.php?t=60607

First, thanks for the great effort you’re making.

any idea when that node based compositor will be available?

torax, that’s a pretty impressive setup! pete, you can check it out from cvs today, or get a testing build for your platform…. of course, what you get is wip stuff, but already pretty nice. I’ve no idea when the next release is- I presume sometime after elephants dream is finished.

Is the node-based compositor embedded in blender behind a hidden switch, or will it be separate? I believe the ability to do both is what makes Houdini such a killer app.

How can I beta test this compositor? I have been a tester for Shake, Nuke, Fusion, Flame, Toxik, Mistika, Piranha and others I can’t even name… none of them excite me as much as an open source project.

Can someone contact me at [email protected] with any real information?

chris, the node based compositor is in blender indeed, and quite open to anyone to test; either check out a fresh cvs, or download a testing build from the testing build forum..

http://blender.org/forum/viewforum.php?f=18&sid=d1e495bb2b1eed0e5a8257005a2bd1a6

that suits your os… and have fun!

Regarding those who were asking how to let multiple programs access the OSS dsp: would using artsdsp or esddsp work?

E.g.

artsdsp blender -w

or

esddsp blender -w

Color me awed. You are adding features that make love.

This is *so* great!

I was planning to get Fusion5 but now I’m not sure..

Is there some place where I could get info about included/planned compositing functionality? Like available nodes and so?

And about OSS, at least FreeBSD does realtime kernel mixing of multiple sources. And then there is 4Front Technologies and their drivers for many systems, like Linux (currently without charge, I think):

http://www.opensound.com/

Hi Bassam, how can I contact you directly? Do you have an email that I can write to?